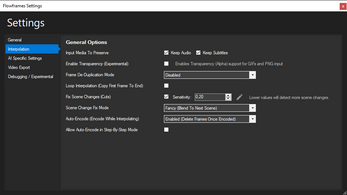

Flowframes - Fast Video Interpolation for any GPU

A downloadable tool for Windows

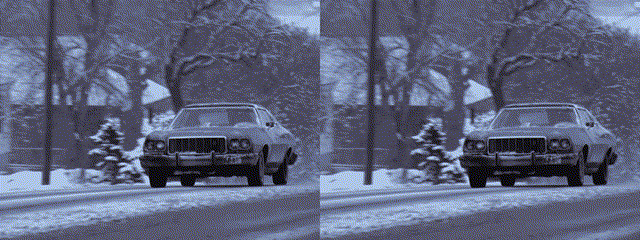

Flowframes is a simple but powerful app that uses advanced AI frameworks to interpolate videos, increasing their frame rate while keeping motion as natural-looking as possible.

The latest versions are exclusive to Patreon for a while, so the itch.io version is not the latest! The free version is currently 1.36.0.

Some of the features currently exclusive to Patreon include:

- Newer AI models with higher speed and quality

- Real-time output mode

- VapourSynth implementation (no need to extract video frames for interpolation)

- Video and audio encoding improvements

- More quality-of-life features

Features:

- Focused on RIFE, but also supports DAIN, plus experimental support for FLAVR and XVFI

- Also supports AMD GPUs via NCNN/Vulkan (for RIFE and DAIN)

- Includes everything needed; no additional software has to be installed

- Can read MP4, GIF, WEBM, MKV, MOV, BIK, and many other video formats

- Can also take image sequences (PNG, JPEG, and more) as input

- Output as video (MP4/MKV/WEBM/MOV), GIF, or frames

- Supports advanced video codecs such as H.265/HEVC, VP9, and AV1 for export

- Preserves all audio and subtitle tracks from the input video with no quality loss

- Built-in frame de-duplication and speed compensation (for 2D animation, etc.)

- Scene detection to avoid artifacts at scene cuts

- ...and more!

If you need any help or have questions, join the Discord:

Minimum System Requirements:

- Vulkan-capable graphics card (anything less than 6 years old should work)

- Windows 10/11 (Windows 7 might work but is NOT officially supported in any way)

Older GPUs like the GT 710 or Intel HD 3xxx/4xxx will NOT work!

Credits:

RIFE: https://github.com/hzwer/Arxiv2020-RIFE

RIFE-NCNN: https://github.com/nihui/rife-ncnn-vulkan

DAIN-NCNN: https://github.com/nihui/dain-ncnn-vulkan

FLAVR: https://github.com/tarun005/FLAVR

XVFI: https://github.com/JihyongOh/XVFI

Car Demo Video: The Philadelphia Experiment (1984)

Gawr Gura Dance Demo GIF: https://reigentei.booth.pm/items/2425521

| Status | In development |
| --- | --- |
| Category | Tool |
| Platforms | Windows |
| Rating | Rated 4.6 out of 5 stars (110 total ratings) |
| Author | N00MKRAD |
| Tags | ai, artificial-intelligence, video |

Download

Click download now to get access to the following files: